Belle Lab

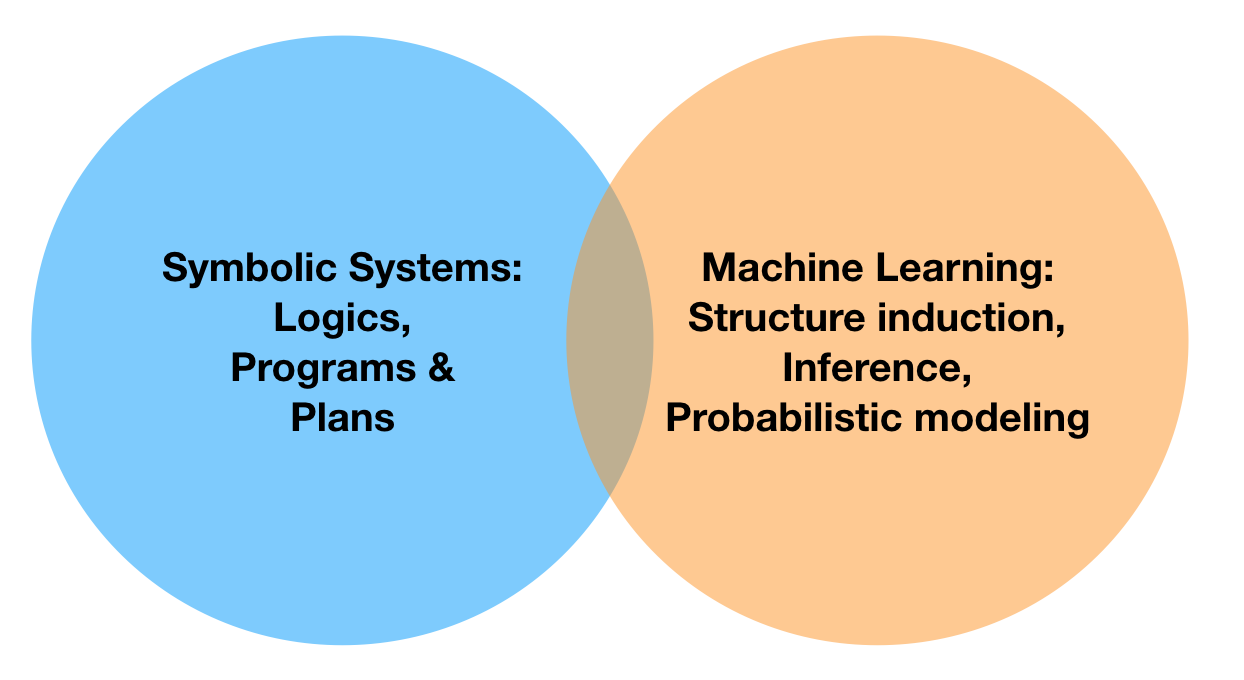

The Lab carries out research in artificial intelligence by unifying learning and logic, with a recent emphasis on explainability and ethics.

We are motivated by the need to augment learning and perception with high-level, structured, commonsense knowledge, so that systems can learn more accurate models of the world, and learn them faster. We are interested in developing computational frameworks that can explain their decisions and that are modular, reusable, and robust to variations in problem description. A non-exhaustive list of topics includes:

- probabilistic and statistical knowledge bases

- ethics and explainability in AI

- exact and approximate probabilistic inference

- statistical relational learning and causality

- unifying deep learning and probabilistic learning methods

- probabilistic programming

- numerical optimization

- automated planning and high-level programming

- reinforcement learning and learning for automated planning

- cognitive robotics

- automated reasoning

- modal logics (knowledge, action, belief)

- multi-agent systems and epistemic planning

- integrating causality and learning

For example, our recent work has touched upon:

- morality in machine learning systems

- tractable learning with relational logic

- deep tractable probabilistic generative models

- learning with missing data

- program learning for explainability

- implementing fairness

- model abstraction for explainability

- strategies for interpretable & responsible AI

Faculty: Vaishak Belle

Postdoctoral fellows and students:

- Miguel Mendez Lucero, interested in neuro-symbolic AI

- Jonathan Feldstein (with James Cheney), interested in neuro-symbolic AI

- Daxin Liu (Postdoctoral fellow with the Royal Society), interested in probabilistic modal logics

- Jessica Ciupa (Master's by Research), interested in reinforcement learning

Alumni:

- Benedicte Legastelois (Postdoctoral fellow with TAS project collaborators), interested in explainability

- Andreas Bueff (PhD 2023), interested in tractable learning and reinforcement learning

- Giannis Papantonis (PhD 2023), interested in causality

- Ionela-Georgiana Mocanu (PhD 2023), interested in PAC learning

- Paulius Dilkas (PhD 2022), interested in model counting

- Xin Du (Postdoctoral fellow with TAS project collaborators), interested in explainability

- Amélie Levray (Postdoctoral fellow 2018-2019), interested in tractable learning with credal networks

- Eleanor Platt (research associate), interested in explainability

- Amit Parag (MScR 2019), interested in tractable models and cosmological simulations

- Rafael Karampatsis (Postdoctoral fellow 2019-2021), interested in ML interpretability

Associates:

- Sandor Bartha (with James Cheney, PhD 2023), interested in program induction

- Gary Smith (with Ron Petrick), interested in epistemic planning

- Xue Li (Postdoctoral fellow with ELIAI), interested in misinformation

- Eddie Ungless (with Bjorn Ross), interested in NLP and bias

- Samuel Kolb (PhD 2019, KU Leuven, with Luc De Raedt), interested in inference for hybrid domains

- Davide Nitti (PhD 2016, KU Leuven, with Luc De Raedt), interested in machine learning for hybrid domains

Visitors:

- Esra Erdem, Sabanci University

- Yoram Moses, Technion

- Brendan Juba, Washington University in St. Louis

- Loizos Michael (via the Alan Turing Institute), Open University of Cyprus

- Till Hofmann, RWTH Aachen University

MSc Students:

If you are an Informatics MSc student at the University of Edinburgh, I supervise a number of theses on the above topics. See, for example, the publications of previous MSc students, including Stefanie Speichert (ILP in hybrid domains), Laszlo Treszkai (generalized planning), Lewis Hammond (moral responsibility), and Michael Varley (fairness).